A new era of intelligent factories: How VLMs enable smarter, safer human–robot partnerships

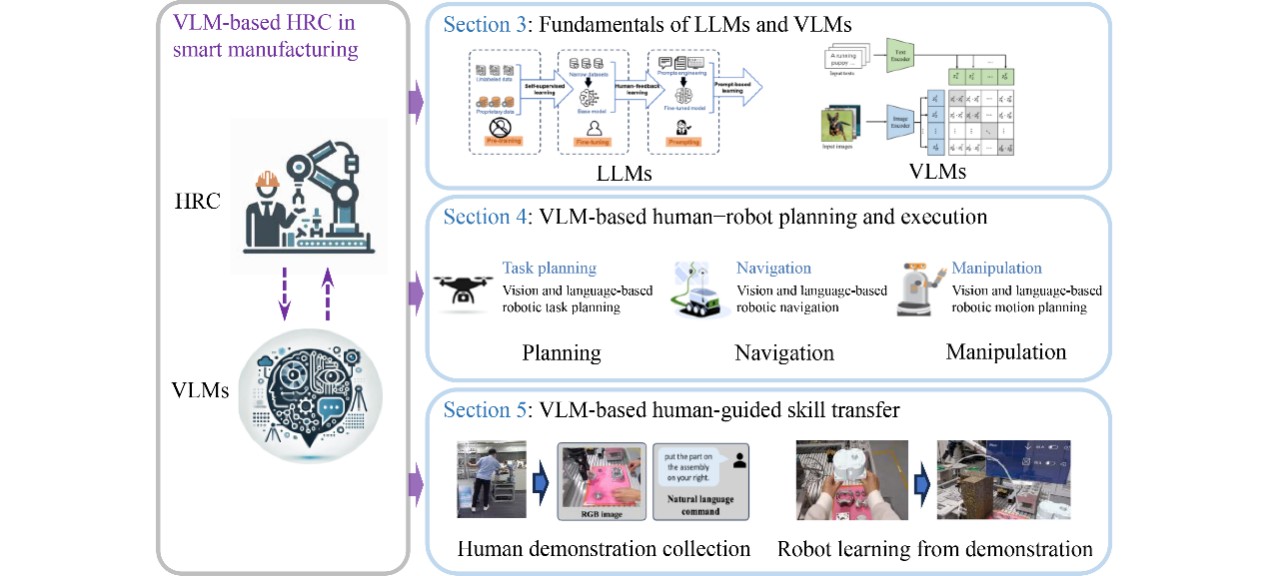

GA, UNITED STATES, December 26, 2025 /EINPresswire.com/ -- Vision-language models (VLMs) are rapidly changing how humans and robots work together, opening a path toward factories where machines can “see,” “read,” and “reason” almost like people. By merging visual perception with natural-language understanding, these models allow robots to interpret complex scenes, follow spoken or written instructions, and generate multi-step plans—a combination that traditional, rule-based systems could not achieve. This new survey brings together breakthrough research on VLM-enhanced task planning, navigation, manipulation, and multimodal skill transfer. It shows how VLMs are enabling robots to become flexible collaborators instead of scripted tools, signaling a profound shift in the future architecture of smart manufacturing.

Human–robot collaboration has long been promised as a cornerstone of next-generation manufacturing, yet conventional robots often fall short—constrained by brittle programming, limited perception, and minimal understanding of human intent. Industrial lines are dynamic, and robots that cannot adapt struggle to perform reliably. Meanwhile, advances in artificial intelligence, especially large language models and multimodal learning, have begun to show how machines could communicate and reason in more human-like ways. But the integration of these capabilities into factory environments remains fragmented. Because of these challenges, deeper investigation into vision-language-model-based human–robot collaboration is urgently needed.

A team from The Hong Kong Polytechnic University and KTH Royal Institute of Technology has published a new survey in Frontiers of Engineering Management (March 2025), delivering the first comprehensive mapping of how vision-language models (VLMs) are reshaping human–robot collaboration in smart manufacturing. Drawing on 109 studies from 2020–2024, the authors examine how VLMs, AI systems that jointly process images and language, enable robots to plan tasks, navigate complex environments, perform manipulation, and learn new skills directly from multimodal demonstrations.

The survey traces how VLMs add a powerful cognitive layer to robots, beginning with core architectures based on transformers and dual-encoder designs. It outlines how VLMs learn to align images and text through contrastive objectives, generative modeling, and cross-modal matching, producing shared semantic spaces that robots can use to understand both environments and instructions. In task planning, VLMs help robots interpret human commands, analyze real-time scenes, break down multi-step instructions, and generate executable action sequences. Systems built on CLIP, GPT-4V, BERT, and ResNet are reported to achieve success rates above 90% in collaborative assembly and tabletop manipulation tasks. In navigation, VLMs allow robots to translate natural-language goals into movement, mapping visual cues to spatial decisions. These models can follow detailed step-by-step instructions or reason from higher-level intent, enabling robust autonomy in domestic, industrial, and embodied environments. In manipulation, VLMs help robots recognize objects, evaluate affordances, and adjust to human motion, all key capabilities for safety-critical collaboration on factory floors. The review also highlights emerging work in multimodal skill transfer, where robots learn directly from vision-language demonstrations rather than labor-intensive coding.
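To make the idea of aligning images and text through a contrastive objective more concrete, here is a minimal sketch of a symmetric contrastive loss of the kind popularized by dual-encoder models such as CLIP. It is an illustration only, not the survey's method or any particular model's implementation: the function names, the toy three-dimensional embeddings, and the temperature value of 0.07 are all assumptions chosen for the example. Real systems obtain the embeddings from trained image and text encoders.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum - row[i]
    return total / len(logits)

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric image-to-text and text-to-image contrastive loss.

    Pair i (image_embs[i], text_embs[i]) is treated as the matching pair;
    every other combination in the batch acts as a negative.
    The temperature default is an assumed value for illustration.
    """
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    # Similarity matrix: rows are images, columns are texts.
    logits = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts]
              for i in imgs]
    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_diagonal(logits)
                  + cross_entropy_diagonal(transposed))

# Toy embeddings (made up): each "image" points roughly the same way as its caption.
texts = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
matched_images = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
# Rotate the images by one position so that no image sits next to its caption.
mismatched_images = matched_images[1:] + matched_images[:1]

matched_loss = contrastive_loss(matched_images, texts)
mismatched_loss = contrastive_loss(mismatched_images, texts)
print(matched_loss < mismatched_loss)
```

The loss is low when each image embedding lies closest to its own caption and high when the pairs are scrambled. Minimizing it during training is what pulls matching images and text together in a shared semantic space.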

The authors emphasize that VLMs mark a turning point for industrial robotics because they enable a shift from scripted automation to contextual understanding. “Robots equipped with VLMs can comprehend both what they see and what they are told,” they explain, highlighting that this dual-modality reasoning makes interaction more intuitive and safer for human workers. At the same time, they caution that achieving large-scale deployment will require addressing challenges in model efficiency, robustness, and data collection, as well as developing industrial-grade multimodal benchmarks for reliable evaluation.

The authors envision VLM-enabled robots becoming central to future smart factories—capable of adjusting to changing tasks, assisting workers in assembly, retrieving tools, managing logistics, conducting equipment inspections, and coordinating multi-robot systems. As VLMs mature, robots could learn new procedures from video-and-language demonstrations, reason through long-horizon plans, and collaborate fluidly with humans without extensive reprogramming. The authors conclude that breakthroughs in efficient VLM architectures, high-quality multimodal datasets, and dependable real-time processing will be key to unlocking their full industrial impact, potentially ushering in a new era of safe, adaptive, and human-centric manufacturing.

References

DOI

10.1007/s42524-025-4136-9

Original Source URL

https://doi.org/10.1007/s42524-025-4136-9

Funding Information

This work was mainly supported by the Research Institute for Advanced Manufacturing (RIAM) of The Hong Kong Polytechnic University (1-CDJT); the Intra-Faculty Interdisciplinary Project 2023/24 (1-WZ4N) from the Research Committee of The Hong Kong Polytechnic University; the State Key Laboratory of Intelligent Manufacturing Equipment and Technology, Huazhong University of Science and Technology (IMETKF2024010); the Guangdong–Hong Kong Technology Cooperation Funding Scheme (GHX/075/22GD); the Innovation and Technology Commission (ITC); the COMAC International Collaborative Research Project (COMAC-SFGS-2023-3148); and the General Research Fund from the Research Grants Council of the Hong Kong Special Administrative Region, China (Project Nos. PolyU15210222 and PolyU15206723). Open access funding was provided by The Hong Kong Polytechnic University.

Lucy Wang

BioDesign Research

